Publications

See also online poster presentation.

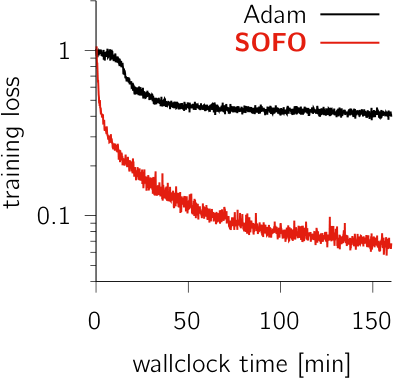

Training recurrent neural networks (RNNs) to perform neuroscience tasks can be challenging. Unlike in machine learning where any architectural modification of an RNN (e.g. GRU or LSTM) is acceptable if it facilitates training, the RNN models trained as models of brain dynamics are subject to plausibility constraints that fundamentally exclude the usual machine learning hacks. The “vanilla” RNNs commonly used in computational neuroscience find themselves plagued by ill-conditioned loss surfaces that complicate training and significantly hinder our capacity to investigate the brain dynamics underlying complex tasks. Moreover, some tasks may require very long time horizons which backpropagation cannot handle given typical GPU memory limits. Here, we develop SOFO, a second-order optimizer that efficiently navigates loss surfaces whilst not requiring backpropagation. By relying instead on easily parallelized batched forward-mode differentiation, SOFO enjoys constant memory cost in time. Moreover, unlike most second-order optimizers which involve inherently sequential operations, SOFO’s effective use of GPU parallelism yields a per-iteration wallclock time essentially on par with first-order gradient-based optimizers. We show vastly superior performance compared to Adam on a number of RNN tasks, including a difficult double-reaching motor task and the learning of an adaptive Kalman filter algorithm trained over a long horizon.
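The constant-memory property of forward-mode differentiation mentioned above can be illustrated with a small sketch. This is not SOFO and not the authors' code: it propagates a single tangent through a hypothetical scalar "vanilla" RNN with a toy squared-error loss, and it omits the second-order step and the batching over many tangents entirely. The `Dual` class, `dual_tanh`, and `loss_and_grad` are illustrative names.

```python
import math


class Dual:
    """A value paired with its derivative along one tangent direction."""

    def __init__(self, val, tan=0.0):
        self.val = val
        self.tan = tan

    @staticmethod
    def lift(x):
        # Treat plain numbers as constants (zero tangent).
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.val + other.val, self.tan + other.tan)

    __radd__ = __add__

    def __sub__(self, other):
        other = Dual.lift(other)
        return Dual(self.val - other.val, self.tan - other.tan)

    def __mul__(self, other):
        other = Dual.lift(other)
        # Product rule.
        return Dual(self.val * other.val,
                    self.tan * other.val + self.val * other.tan)

    __rmul__ = __mul__


def dual_tanh(x):
    t = math.tanh(x.val)
    return Dual(t, (1.0 - t * t) * x.tan)  # chain rule


def loss_and_grad(w, inputs, target):
    """Return (loss, dloss/dw) for h <- tanh(w*h + x), loss = (h_T - target)^2."""
    w_dual = Dual(w, 1.0)  # seed the tangent: differentiate with respect to w
    h = Dual(0.0)          # only the current state is kept, never the history
    for x in inputs:
        h = dual_tanh(w_dual * h + x)
    err = h - target
    loss = err * err
    return loss.val, loss.tan
```

Calling `loss_and_grad(0.7, inputs, 0.2)` on an input sequence of any length stores only the current hidden state and its tangent. Backpropagation, by contrast, would need to keep the whole state history.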

Context is widely regarded as a major determinant of learning and memory across numerous domains, including classical and instrumental conditioning, episodic memory, economic decision-making, and motor learning. However, studies across these domains remain disconnected due to the lack of a unifying framework formalizing the concept of context and its role in learning. Here, we develop a unified vernacular allowing direct comparisons between different domains of contextual learning. This leads to a Bayesian model positing that context is unobserved and needs to be inferred. Contextual inference then controls the creation, expression, and updating of memories. This theoretical approach reveals two distinct components that underlie adaptation, proper and apparent learning, respectively referring to the creation and updating of memories versus time-varying adjustments in their expression. We review a number of extensions of the basic Bayesian model that allow it to account for increasingly complex forms of contextual learning.

Highlights

- Context has widespread effects on memory and learning across multiple domains.

- Context is rarely directly observed by the learner and needs to be inferred.

- Contextual inference is determined by three main factors: performance-related feedback signals, performance-unrelated sensory cues, and spontaneous factors, such as the passage of time.

- Contextual inference controls memory creation, updating, and expression across a variety of domains. In general, this requires multiple memories to be recalled and modified at any time.

- Adaptation is not a monolithic process but arises from a combination of two distinct processes: proper learning, involving the creation and updating of memories, and apparent learning, involving changes in how existing memories are expressed.

- Many classical learning phenomena, typically attributed to proper learning, have been shown to arise primarily from apparent learning.
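The distinction between proper and apparent learning drawn in the highlights above can be made concrete with a toy sketch. It is not the model from the paper: the Gaussian feedback likelihood, the fixed noise level, the learning rate, and all function names are assumptions chosen for illustration.

```python
import math


def gaussian_pdf(x, mean, sd):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def infer_context(prior, memories, feedback, noise_sd=1.0):
    """Posterior probability of each context given one feedback signal."""
    joint = [p * gaussian_pdf(feedback, m, noise_sd)
             for p, m in zip(prior, memories)]
    total = sum(joint)
    return [j / total for j in joint]


def express(context_probs, memories):
    """Output is a mixture of memories weighted by context probabilities."""
    return sum(p * m for p, m in zip(context_probs, memories))


def update(memories, posterior, feedback, rate=0.5):
    """Each memory moves toward the feedback in proportion to its responsibility."""
    return [m + rate * r * (feedback - m)
            for m, r in zip(memories, posterior)]


# Hypothetical example: two contexts with stored memories 0.0 and 1.0.
memories = [0.0, 1.0]
prior = [0.5, 0.5]
posterior = infer_context(prior, memories, feedback=0.9)
output_before = express(prior, memories)      # expression under the prior
output_after = express(posterior, memories)   # memories unchanged, output shifts
new_memories = update(memories, posterior, feedback=0.9)
```

In this sketch the shift from `output_before` to `output_after` happens with the memories untouched, which corresponds to apparent learning. Only the `update` step changes the memories themselves, which corresponds to proper learning.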

Recent breakthroughs in artificial intelligence (AI) have enabled machines to plan in tasks previously thought to be uniquely human. Meanwhile, the planning algorithms implemented by the brain itself remain largely unknown. Here, we review neural and behavioral data in sequential decision-making tasks that elucidate the ways in which the brain does—and does not—plan. To systematically review available biological data, we create a taxonomy of planning algorithms by summarizing the relevant design choices for such algorithms in AI. Across species, recording techniques, and task paradigms, we find converging evidence that the brain represents future states consistent with a class of planning algorithms within our taxonomy—focused, depth-limited, and serial. However, we argue that current data are insufficient for addressing more detailed algorithmic questions. We propose a new approach leveraging AI advances to drive experiments that can adjudicate between competing candidate algorithms.

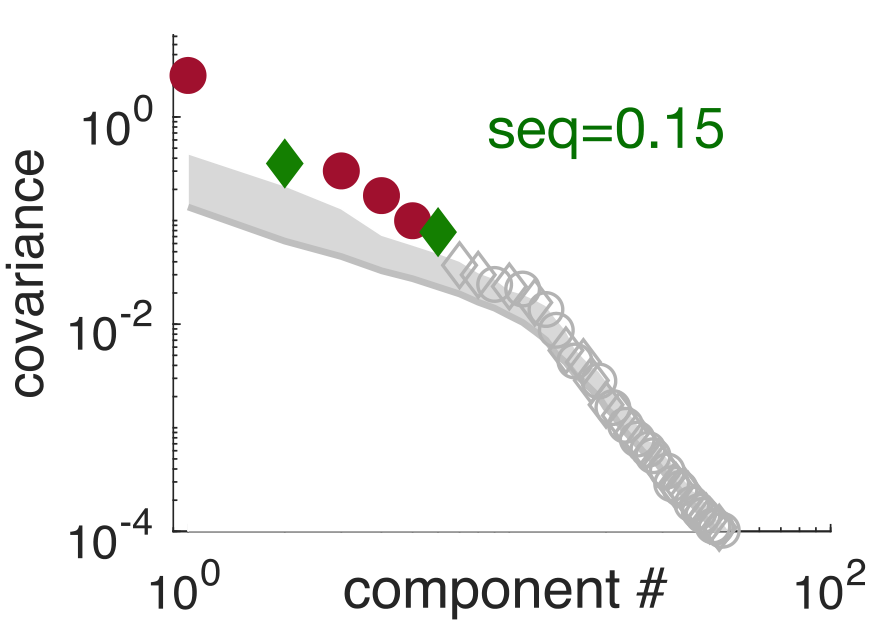

Sequential activity reflecting previously experienced temporal sequences is considered a hallmark of learning across cortical areas. However, it is unknown how cortical circuits avoid the converse problem: producing spurious sequences that do not reflect sequences in their inputs. We develop methods to quantify and study sequentiality in neural responses. We show that recurrent circuit responses generally include spurious sequences, which are specifically prevented in circuits that obey two widely known features of cortical microcircuit organization: Dale’s law and Hebbian connectivity. In particular, spike-timing-dependent plasticity in excitation-inhibition networks leads to an adaptive erasure of spurious sequences. We tested our theory in multielectrode recordings from the visual cortex of awake ferrets. Although responses to natural stimuli were largely non-sequential, responses to artificial stimuli initially included spurious sequences, which diminished over extended exposure. These results reveal an unexpected role for Hebbian experience-dependent plasticity and Dale’s law in sensory cortical circuits.

Highlights

- Recurrent circuits generate spurious sequences without sequential inputs

- A principled measure of total sequentiality in population responses is developed

- Theory predicts that Hebbian plasticity should abolish spurious sequences

- Spurious sequences in the visual cortex diminish with experience

Perception is often described as probabilistic inference requiring an internal representation of uncertainty. However, it is unknown whether uncertainty is represented in a task-dependent manner, solely at the level of decisions, or in a fully Bayesian manner, across the entire perceptual pathway. To address this question, we first codify and evaluate the possible strategies the brain might use to represent uncertainty, and highlight the normative advantages of fully Bayesian representations. In such representations, uncertainty information is explicitly represented at all stages of processing, including early sensory areas, allowing for flexible and efficient computations in a wide variety of situations. Next, we critically review neural and behavioral evidence about the representation of uncertainty in the brain agreeing with fully Bayesian representations. We argue that sufficient behavioral evidence for fully Bayesian representations is lacking and suggest experimental approaches for demonstrating the existence of multivariate posterior distributions along the perceptual pathway.

Neural responses are variable: even under identical experimental conditions, single neuron and population responses typically differ from trial to trial and across time. Recent work has demonstrated that this variability has predictable structure, can be modulated by sensory input and behaviour, and bears critical signatures of the underlying network dynamics and computations. However, current methods for characterising neural variability are primarily geared towards sensory coding in the laboratory: they require trials with repeatable experimental stimuli and behavioural covariates. In addition, they make strong assumptions about the parametric form of variability, rely on assumption-free but data-inefficient histogram-based approaches, or are altogether ill-suited for capturing variability modulation by covariates. Here we present a universal probabilistic spike count model that eliminates these shortcomings. Our method builds on sparse Gaussian processes and can model arbitrary spike count distributions (SCDs) with flexible dependence on observed as well as latent covariates, using scalable variational inference to jointly infer the covariate-to-SCD mappings and latent trajectories in a data efficient way. Without requiring repeatable trials, it can flexibly capture covariate-dependent joint SCDs, and provide interpretable latent causes underlying the statistical dependencies between neurons. We apply the model to recordings from a canonical non-sensory neural population: head direction cells in the mouse. We find that variability in these cells defies a simple parametric relationship with mean spike count as assumed in standard models, its modulation by external covariates can be comparably strong to that of the mean firing rate, and slow low-dimensional latent factors explain away neural correlations. 
Our approach paves the way to understanding the mechanisms and computations underlying neural variability under naturalistic conditions, beyond the realm of sensory coding with repeatable stimuli.

Humans spend a lifetime learning, storing and refining a repertoire of motor memories. For example, through experience, we become proficient at manipulating a large range of objects with distinct dynamical properties. However, it is unknown what principle underlies how our continuous stream of sensorimotor experience is segmented into separate memories and how we adapt and use this growing repertoire. Here we develop a theory of motor learning based on the key principle that memory creation, updating and expression are all controlled by a single computation—contextual inference. Our theory reveals that adaptation can arise both by creating and updating memories (proper learning) and by changing how existing memories are differentially expressed (apparent learning). This insight enables us to account for key features of motor learning that had no unified explanation: spontaneous recovery, savings, anterograde interference, how environmental consistency affects learning rate and the distinction between explicit and implicit learning. Critically, our theory also predicts new phenomena—evoked recovery and context-dependent single-trial learning—which we confirm experimentally. These results suggest that contextual inference, rather than classical single-context mechanisms, is the key principle underlying how a diverse set of experiences is reflected in our motor behaviour.

See also commentary.

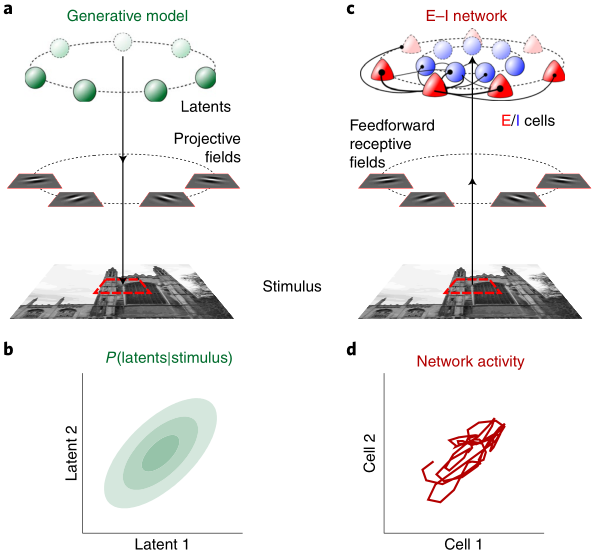

Sensory cortices display a suite of ubiquitous dynamical features, such as ongoing noise variability, transient overshoots and oscillations, that have so far escaped a common, principled theoretical account. We developed a unifying model for these phenomena by training a recurrent excitatory–inhibitory neural circuit model of a visual cortical hypercolumn to perform sampling-based probabilistic inference. The optimized network displayed several key biological properties, including divisive normalization and stimulus-modulated noise variability, inhibition-dominated transients at stimulus onset and strong gamma oscillations. These dynamical features had distinct functional roles in speeding up inferences and made predictions that we confirmed in novel analyses of recordings from awake monkeys. Our results suggest that the basic motifs of cortical dynamics emerge as a consequence of the efficient implementation of the same computational function — fast sampling-based inference — and predict further properties of these motifs that can be tested in future experiments.

The concept of objects is fundamental to cognition and is defined by a consistent set of sensory properties and physical affordances. Although it is unknown how the abstract concept of an object emerges, most accounts assume that visual or haptic boundaries are crucial in this process. Here, we tested an alternative hypothesis that boundaries are not essential but simply reflect a more fundamental principle: consistent visual or haptic statistical properties. Using a novel visuo-haptic statistical learning paradigm, we familiarised participants with objects defined solely by across-scene statistics provided either visually or through physical interactions. We then tested them on both a visual familiarity and a haptic pulling task, thus measuring both within-modality learning and across-modality generalisation. Participants showed strong within-modality learning and ‘zero-shot’ across-modality generalisation which were highly correlated. Our results demonstrate that humans can segment scenes into objects, without any explicit boundary cues, using purely statistical information.

An important computational goal of the visual system is ‘representational untangling’ (RU): representing increasingly complex features of visual scenes in an easily decodable format. RU is typically assumed to be achieved in high-level visual cortices via several stages of cortical processing. Here we show, using a canonical population coding model, that RU of low-level orientation information is already performed at the first cortical stage of visual processing, but not before that, by a fundamental cellular-level property: the thresholded firing rate nonlinearity of simple cells in the primary visual cortex (V1). We identified specific, experimentally measurable parameters that determined the optimal firing threshold for RU and found that the thresholds of V1 simple cells extracted from in vivo recordings in awake behaving mice were near optimal. These results suggest that information re-formatting, rather than maximisation, may already be a relevant computational goal for the early visual system.

Computational neuroscience is coming of age. In the not-so-distant past, data sets were small and painted a very incomplete picture of neuronal activity. This forced theoreticians to build models that were limited in scope, and often difficult to merge with theory being built from other small data sets. Computational neuroscience was happening on isolated islands across our community. We now live in an era where massive data sets are routinely collected, which often cross many spatial and temporal scales and combine both physiology and behaviour. Because of this, theoreticians now have the licence (and necessity) to think more broadly, aiming for models that capture neural activity over many scales, including behavior. In parallel, a more mature theoretical community has built solid social networks (through conferences such as the Computational Neuroscience, Computational and Systems Neuroscience, and Cognitive Computational Neuroscience conferences) that encourage a vibrant discussion across modelling traditions. All of this happens with an ever-increasing understanding of the role of computation from experimentalists (thanks, in large part, to summer courses co-training experimentalists with theoreticians, such as the Woods Hole or CAJAL courses), significantly enriching the discussion. This new world has not only deepened the computational neuroscience happening on the islands but perhaps more importantly has built bridges between them. This expansive theoretical landscape that includes expertise across mathematical and statistical communities is essential for any hope of using theory to help organize the data coming from our much bigger windows into brain activity.

This issue of Current Opinion in Neurobiology is devoted to giving a flavor of the computational neuroscience being done in our time. To guide the reader, we first grouped the various reviews into ones that summarize work being done on an island and ones that describe the bridges being built between islands. This grouping is by no means unique and is bound to reflect our tastes as editors. All of the contributions have the spirit of a broad theory that attempts to encompass rich and varied neuroscience questions. We hope that these reviews inspire more bridges which will link even bigger islands.

[…]

This is an invited commentary on Ma et al., Nature Neuroscience (2006).

In 2006, Ma et al. presented an elegant theory for how populations of neurons might represent uncertainty to perform Bayesian inference. Critically, according to this theory, neural variability is no longer a nuisance, but rather a vital part of how the brain encodes probability distributions and performs computations with them.

Stochastic gradient descent (SGD) remains the method of choice for deep learning, despite the limitations arising for ill-behaved objective functions. In cases where it could be estimated, the natural gradient has proven very effective at mitigating the catastrophic effects of pathological curvature in the objective function, but little is known theoretically about its convergence properties, and it has yet to find a practical implementation that would scale to very deep and large networks. Here, we derive an exact expression for the natural gradient in deep linear networks, which exhibit pathological curvature similar to the nonlinear case. We provide for the first time an analytical solution for its convergence rate, showing that the loss decreases exponentially to the global minimum in parameter space. Our expression for the natural gradient is surprisingly simple, computationally tractable, and explains why some approximations proposed previously work well in practice. This opens new avenues for approximating the natural gradient in the nonlinear case, and we show in preliminary experiments that our online natural gradient descent outperforms SGD on MNIST autoencoding while sharing its computational simplicity.

Two theoretical ideas have emerged recently with the ambition to provide a unifying functional explanation of neural population coding and dynamics: predictive coding and Bayesian inference. Here, we describe the two theories and their combination into a single framework: Bayesian predictive coding. We clarify how the two theories can be distinguished, despite sharing core computational concepts and addressing an overlapping set of empirical phenomena. We argue that predictive coding is an algorithmic/representational motif that can serve several different computational goals of which Bayesian inference is but one. Conversely, while Bayesian inference can utilize predictive coding, it can also be realized by a variety of other representations. We critically evaluate the experimental evidence supporting Bayesian predictive coding and discuss how to test it more directly.

Behavioral and neuroscientific data on reward-based decision making point to a fundamental distinction between habitual and goal-directed action selection. The formation of habits, which requires simple updating of cached values, has been studied in great detail, and the reward prediction error theory of dopamine function has enjoyed prominent success in accounting for its neural bases. In contrast, the neural circuit mechanisms of goal-directed decision making, requiring extended iterative computations to estimate values online, are still unknown. Here we present a spiking neural network that provably solves the difficult online value estimation problem underlying goal-directed decision making in a near-optimal way and reproduces behavioral as well as neurophysiological experimental data on tasks ranging from simple binary choice to sequential decision making. Our model uses local plasticity rules to learn the synaptic weights of a simple neural network to achieve optimal performance and solves one-step decision-making tasks, commonly considered in neuroeconomics, as well as more challenging sequential decision-making tasks within 1 s. These decision times, and their parametric dependence on task parameters, as well as the final choice probabilities match behavioral data, whereas the evolution of neural activities in the network closely mimics neural responses recorded in frontal cortices during the execution of such tasks. Our theory provides a principled framework to understand the neural underpinning of goal-directed decision making and makes novel predictions for sequential decision-making tasks with multiple rewards.

Looking times (LTs) are frequently measured in empirical research on infant cognition. We analyzed the statistical distribution of LTs across participants to develop recommendations for their treatment in infancy research. Our analyses focused on a common within-subject experimental design, in which longer looking to novel or unexpected stimuli is predicted. We analyzed data from 2 sources: an in-house set of LTs that included data from individual participants (47 experiments, 1,584 observations), and a representative set of published articles reporting group-level LT statistics (149 experiments from 33 articles). We established that LTs are log-normally distributed across participants, and therefore, should always be log-transformed before parametric statistical analyses. We estimated the typical size of significant effects in LT studies, which allowed us to make recommendations about setting sample sizes. We show how our estimate of the distribution of effect sizes of LT studies can be used to design experiments to be analyzed by Bayesian statistics, where the experimenter is required to determine in advance the predicted effect size rather than the sample size. We demonstrate the robustness of this method in both sets of LT experiments.
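The recommendation to log-transform looking times before parametric analysis can be sketched for the within-subject design described above. This is a minimal illustration, not the authors' analysis code: the function name is hypothetical and the looking times are made-up numbers, not data from the study.

```python
import math
import statistics


def paired_t_on_log(novel, familiar):
    """Paired t statistic and degrees of freedom on log-transformed looking times."""
    # Differences of logs are log ratios, so the test concerns the ratio of LTs.
    diffs = [math.log(n) - math.log(f) for n, f in zip(novel, familiar)]
    mean_diff = statistics.mean(diffs)
    sem = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return mean_diff / sem, len(diffs) - 1


# Made-up looking times in seconds, one pair per infant (illustration only).
novel_lt = [12.1, 8.4, 20.3, 6.7, 15.0, 9.9]
familiar_lt = [9.0, 7.9, 11.2, 6.9, 10.4, 8.1]
t_stat, dof = paired_t_on_log(novel_lt, familiar_lt)
```

Working on the log scale means the effect is expressed as a ratio of looking times: the exponential of the mean log difference is the geometric mean of the novel-to-familiar ratio.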

Interpreting visual scenes typically requires us to accumulate information from multiple locations in a scene. Using a novel gaze-contingent paradigm in a visual categorization task, we show that participants’ scan paths follow an active sensing strategy that incorporates information already acquired about the scene and knowledge of the statistical structure of patterns. Intriguingly, categorization performance was markedly improved when locations were revealed to participants by an optimal Bayesian active sensor algorithm. By using a combination of a Bayesian ideal observer and the active sensor algorithm, we estimate that a major portion of this apparent suboptimality of fixation locations arises from prior biases, perceptual noise and inaccuracies in eye movements, and the central process of selecting fixation locations is around 70% efficient in our task. Our results suggest that participants select eye movements with the goal of maximizing information about abstract categories that require the integration of information from multiple locations.

A key component of interacting with the world is how to direct one’s sensors so as to extract task-relevant information, a process referred to as active sensing. In this review, we present a framework for active sensing that forms a closed loop between an ideal observer, which extracts task-relevant information from a sequence of observations, and an ideal planner, which specifies the actions that lead to the most informative observations. We discuss active sensing as an approximation to exploration in the wider framework of reinforcement learning, and conversely, discuss several sensory, perceptual, and motor processes as approximations to active sensing. Based on this framework, we introduce a taxonomy of sensing strategies, identify hallmarks of active sensing, and discuss recent advances in formalizing and quantifying active sensing.

Even in state-spaces of modest size, planning is plagued by the “curse of dimensionality”. This problem is particularly acute in human and animal cognition given the limited capacity of working memory, and the time pressures under which planning often occurs in the natural environment. Hierarchically organized modular representations have long been suggested to underlie the capacity of biological systems to efficiently and flexibly plan in complex environments. However, the principles underlying efficient modularization remain obscure, making it difficult to identify its behavioral and neural signatures. Here, we develop a normative theory of efficient state-space representations which partitions an environment into distinct modules by minimizing the average (information theoretic) description length of planning within the environment, thereby optimally trading off the complexity of planning across and within modules. We show that such optimal representations provide a unifying account for a diverse range of hitherto unrelated phenomena at multiple levels of behavior and neural representation.

Neural responses in the visual cortex are variable, and there is now an abundance of data characterizing how the magnitude and structure of this variability depends on the stimulus. Current theories of cortical computation fail to account for these data; they either ignore variability altogether or only model its unstructured Poisson-like aspects. We develop a theory in which the cortex performs probabilistic inference such that population activity patterns represent statistical samples from the inferred probability distribution. Our main prediction is that perceptual uncertainty is directly encoded by the variability, rather than the average, of cortical responses. Through direct comparisons to previously published data as well as original data analyses, we show that a sampling-based probabilistic representation accounts for the structure of noise, signal, and spontaneous response variability and correlations in the primary visual cortex. These results suggest a novel role for neural variability in cortical dynamics and computations.
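The core idea that uncertainty is carried by response variability rather than by the mean can be illustrated with a one-dimensional toy example. It is not the model from the paper: the zero-mean Gaussian prior, the Gaussian observation noise, and the function names are assumptions, and "responses" here are simply samples from the resulting posterior.

```python
import random
import statistics


def posterior_params(observation, noise_var, prior_var=1.0):
    """Posterior mean and variance for a zero-mean Gaussian prior and Gaussian noise."""
    post_var = 1.0 / (1.0 / prior_var + 1.0 / noise_var)
    post_mean = post_var * observation / noise_var
    return post_mean, post_var


def sample_responses(observation, noise_var, n_samples, seed=0):
    """Draw toy 'responses' as samples from the posterior distribution."""
    rng = random.Random(seed)
    mean, var = posterior_params(observation, noise_var)
    return [rng.gauss(mean, var ** 0.5) for _ in range(n_samples)]


# Same observation, two hypothetical reliability levels.
reliable = sample_responses(1.0, noise_var=0.1, n_samples=5000)
unreliable = sample_responses(1.0, noise_var=4.0, n_samples=5000)
```

In this sketch `statistics.variance(unreliable)` exceeds `statistics.variance(reliable)`: the less reliable the input, the wider the posterior and the more variable the sampled responses.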

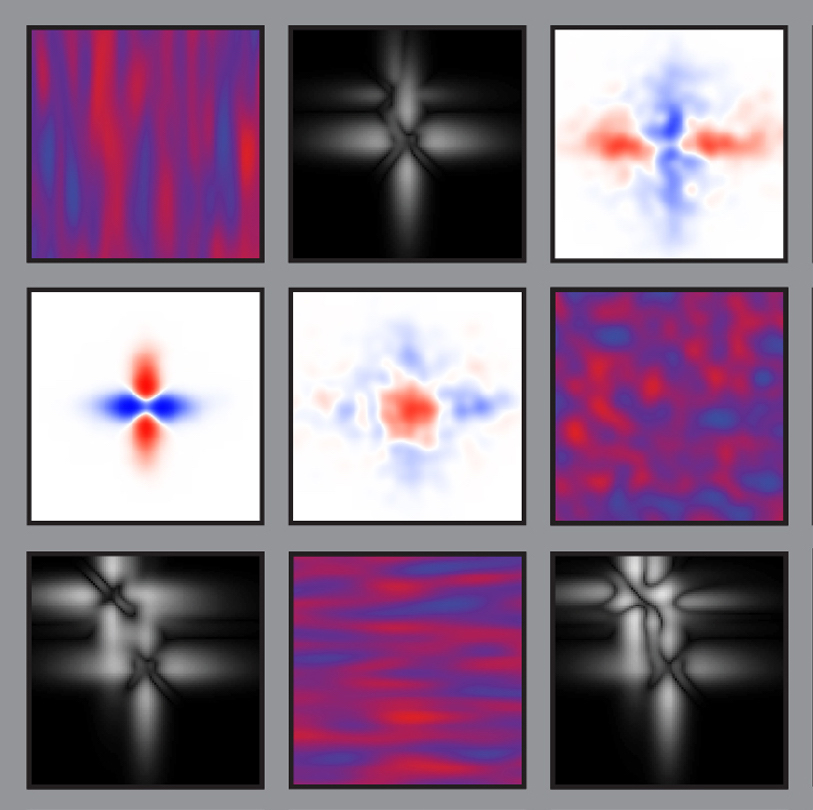

Probabilistic inference offers a principled framework for understanding both behaviour and cortical computation. However, two basic and ubiquitous properties of cortical responses seem difficult to reconcile with probabilistic inference: neural activity displays prominent oscillations in response to constant input, and large transient changes in response to stimulus onset. Indeed, cortical models of probabilistic inference have typically either concentrated on tuning curve or receptive field properties and remained agnostic as to the underlying circuit dynamics, or had simplistic dynamics that gave neither oscillations nor transients. Here we show that these dynamical behaviours may in fact be understood as hallmarks of the specific representation and algorithm that the cortex employs to perform probabilistic inference. We demonstrate that a particular family of probabilistic inference algorithms, Hamiltonian Monte Carlo (HMC), naturally maps onto the dynamics of excitatory-inhibitory neural networks. Specifically, we constructed a model of an excitatory-inhibitory circuit in primary visual cortex that performed HMC inference, and thus inherently gave rise to oscillations and transients. These oscillations were not mere epiphenomena but served an important functional role: speeding up inference by rapidly spanning a large volume of state space. Inference thus became an order of magnitude more efficient than in a non-oscillatory variant of the model. In addition, the network matched two specific properties of observed neural dynamics that would otherwise be difficult to account for using probabilistic inference. First, the frequency of oscillations as well as the magnitude of transients increased with the contrast of the image stimulus. Second, excitation and inhibition were balanced, and inhibition lagged excitation. 
These results suggest a new functional role for the separation of cortical populations into excitatory and inhibitory neurons, and for the neural oscillations that emerge in such excitatory-inhibitory networks: enhancing the efficiency of cortical computations.
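For readers unfamiliar with Hamiltonian Monte Carlo, the following is a generic textbook sketch of the algorithm on a one-dimensional Gaussian target. It is not the excitatory-inhibitory network model described above. The target distribution, step size, trajectory length, and function names are all assumptions chosen for illustration.

```python
import math
import random


def hmc_sample(neg_log_p, grad_neg_log_p, x0, n_samples,
               step=0.2, n_leapfrog=15, seed=0):
    """Sample from exp(-neg_log_p(x)) with leapfrog dynamics and a Metropolis test."""
    rng = random.Random(seed)
    x = x0
    samples = []
    for _ in range(n_samples):
        p = rng.gauss(0.0, 1.0)  # resample the auxiliary momentum
        x_new, p_new = x, p
        # Leapfrog integration: half step in momentum, alternating full steps,
        # then a final half step in momentum.
        p_new -= 0.5 * step * grad_neg_log_p(x_new)
        for i in range(n_leapfrog):
            x_new += step * p_new
            if i < n_leapfrog - 1:
                p_new -= step * grad_neg_log_p(x_new)
        p_new -= 0.5 * step * grad_neg_log_p(x_new)
        # Accept or reject based on the change in total energy.
        h_old = neg_log_p(x) + 0.5 * p * p
        h_new = neg_log_p(x_new) + 0.5 * p_new * p_new
        if rng.random() < math.exp(min(0.0, h_old - h_new)):
            x = x_new
        samples.append(x)
    return samples


# Hypothetical target: a Gaussian with mean 1 and standard deviation 2.
def neg_log_p(x):
    return 0.5 * ((x - 1.0) / 2.0) ** 2


def grad_neg_log_p(x):
    return (x - 1.0) / 4.0


draws = hmc_sample(neg_log_p, grad_neg_log_p, x0=0.0, n_samples=5000)
```

The oscillatory exchange between position and momentum during each trajectory is what lets the sampler cross the state space quickly. This is the general property of the algorithm that the abstract links to faster inference.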

According to the dominant view, time in perceptual decision making is used for integrating new sensory evidence. Based on a probabilistic framework, we investigated the alternative hypothesis that time is used for gradually refining an internal estimate of uncertainty, that is to obtain an increasingly accurate approximation of the posterior distribution through collecting samples from it. In the context of a simple orientation estimation task, we analytically derived predictions of how humans should behave under the two hypotheses, and identified the across-trial correlation between error and subjective uncertainty as a proper assay to distinguish between them. Next, we developed a novel experimental paradigm that could be used to reliably measure these quantities, and tested the predictions derived from the two hypotheses. We found that in our task, humans show clear evidence that they use time mostly for probabilistic sampling and not for evidence integration. These results provide the first empirical support for iteratively improving probabilistic representations in perceptual decision making, and open the way to reinterpret the role of time in the cortical processing of complex sensory information.

Cortical neurons integrate thousands of synaptic inputs in their dendrites in highly nonlinear ways. It is unknown how these dendritic nonlinearities in individual cells contribute to computations at the level of neural circuits. Here, we show that dendritic nonlinearities are critical for the efficient integration of synaptic inputs in circuits performing analog computations with spiking neurons. We developed a theory that formalizes how a neuron’s dendritic nonlinearity that is optimal for integrating synaptic inputs depends on the statistics of its presynaptic activity patterns. Based on their in vivo presynaptic population statistics (firing rates, membrane potential fluctuations, and correlations due to ensemble dynamics), our theory accurately predicted the responses of two different types of cortical pyramidal cells to patterned stimulation by two-photon glutamate uncaging. These results reveal a new computational principle underlying dendritic integration in cortical neurons by suggesting a functional link between cellular and systems-level properties of cortical circuits.

A venerable history of classical work on autoassociative memory has significantly shaped our understanding of several features of the hippocampus, and most prominently of its CA3 area, in relation to memory storage and retrieval. However, existing theories of hippocampal memory processing ignore a key biological constraint affecting memory storage in neural circuits: the bounded dynamical range of synapses. Recent treatments based on the notion of metaplasticity provide a powerful model for individual bounded synapses; however, their implications for the ability of the hippocampus to retrieve memories well and the dynamics of neurons associated with that retrieval are both unknown. Here, we develop a theoretical framework for memory storage and recall with bounded synapses. We formulate the recall of a previously stored pattern from a noisy recall cue and limited-capacity (and therefore lossy) synapses as a probabilistic inference problem, and derive neural dynamics that implement approximate inference algorithms to solve this problem efficiently. In particular, for binary synapses with metaplastic states, we demonstrate for the first time that memories can be efficiently read out with biologically plausible network dynamics that are completely constrained by the synaptic plasticity rule, and the statistics of the stored patterns and of the recall cue. Our theory organises into a coherent framework a wide range of existing data about the regulation of excitability, feedback inhibition, and network oscillations in area CA3, and makes novel and directly testable predictions that can guide future experiments.

Probabilistic inference offers a principled framework for understanding both behaviour and cortical computation. However, two basic and ubiquitous properties of cortical responses seem difficult to reconcile with probabilistic inference: neural activity displays prominent oscillations in response to constant input, and large transient changes in response to stimulus onset. Here we show that these dynamical behaviours may in fact be understood as hallmarks of the specific representation and algorithm that the cortex employs to perform probabilistic inference. We demonstrate that a particular family of probabilistic inference algorithms, Hamiltonian Monte Carlo (HMC), naturally maps onto the dynamics of excitatory-inhibitory neural networks. Specifically, we constructed a model of an excitatory-inhibitory circuit in primary visual cortex that performed HMC inference, and thus inherently gave rise to oscillations and transients. These oscillations were not mere epiphenomena but served an important functional role: speeding up inference by rapidly spanning a large volume of state space. Inference thus became an order of magnitude more efficient than in a non-oscillatory variant of the model. In addition, the network matched two specific properties of observed neural dynamics that would otherwise be difficult to account for in the context of probabilistic inference. First, the frequency of oscillations as well as the magnitude of transients increased with the contrast of the image stimulus. Second, excitation and inhibition were balanced, and inhibition lagged excitation. These results suggest a new functional role for the separation of cortical populations into excitatory and inhibitory neurons, and for the neural oscillations that emerge in such excitatory-inhibitory networks: enhancing the efficiency of cortical computations.

We study the early locust olfactory system in an attempt to explain its well-characterized structure and dynamics. We first propose its computational function as recovery of high-dimensional sparse olfactory signals from a small number of measurements. Detailed experimental knowledge about this system rules out standard algorithmic solutions to this problem. Instead, we show that solving a dual formulation of the corresponding optimisation problem yields structure and dynamics in good agreement with biological data. Further biological constraints lead us to a reduced form of this dual formulation in which the system uses independent component analysis to continuously adapt to its olfactory environment to allow accurate sparse recovery. Our work demonstrates the challenges and rewards of attempting detailed understanding of experimentally well-characterized systems.

- February 2013: Comment on Okun et al. (2012)

- March 2013: Response to the reply of Okun et al. (2013) to our comments

Humans develop rich mental representations that guide their behavior in a variety of everyday tasks. However, it is unknown whether these representations, often formalized as priors in Bayesian inference, are specific for each task or subserve multiple tasks. Current approaches cannot distinguish between these two possibilities because they cannot extract comparable representations across different tasks. Here, we develop a novel method, termed cognitive tomography, that can extract complex, multidimensional priors across tasks. We apply this method to human judgments in two qualitatively different tasks, “familiarity” and “odd one out,” involving an ecologically relevant set of stimuli, human faces. We show that priors over faces are structurally complex and vary dramatically across subjects, but are invariant across the tasks within each subject. The priors we extract from each task allow us to predict with high precision the behavior of subjects for novel stimuli both in the same task as well as in the other task. Our results provide the first evidence for a single high-dimensional structured representation of a naturalistic stimulus set that guides behavior in multiple tasks. Moreover, the representations estimated by cognitive tomography can provide independent, behavior-based regressors for elucidating the neural correlates of complex naturalistic priors.

It has long been recognised that statistical dependencies in neuronal activity need to be taken into account when decoding stimuli encoded in a neural population. Less studied, though equally pernicious, is the need to take account of dependencies between synaptic weights when decoding patterns previously encoded in an auto-associative memory. We show that activity-dependent learning generically produces such correlations, and failing to take them into account in the dynamics of memory retrieval leads to catastrophically poor recall. We derive optimal network dynamics for recall in the face of synaptic correlations caused by a range of synaptic plasticity rules. These dynamics involve well-studied circuit motifs, such as forms of feedback inhibition and experimentally observed dendritic nonlinearities. We therefore show how addressing the problem of synaptic correlations leads to a novel functional account of key biophysical features of the neural substrate.

The dendritic tree contributes significantly to the elementary computations a neuron performs while converting its synaptic inputs into action potential output. Traditionally, these computations have been characterized as both temporally and spatially localized. Under this account, neurons compute near-instantaneous mappings from their current input to their current output, brought about by somatic summation of dendritic contributions that are generated in functionally segregated compartments. However, recent evidence about the presence of oscillations in dendrites suggests a qualitatively different mode of operation: the instantaneous phase of such oscillations can depend on a long history of inputs, and, under appropriate conditions, even dendritic oscillators that are remote may interact through synchronization. Here, we develop a mathematical framework to analyze the interactions of local dendritic oscillations, and the way these interactions influence single cell computations. Combining weakly coupled oscillator methods with cable theoretic arguments, we derive phase-locking states for multiple oscillating dendritic compartments. We characterize how the phase-locking properties depend on key parameters of the oscillating dendrite: the electrotonic properties of the (active) dendritic segment, and the intrinsic properties of the dendritic oscillators. As a direct consequence, we show how input to the dendrites can modulate phase-locking behavior and hence global dendritic coherence. In turn, dendritic coherence is able to gate the integration and propagation of synaptic signals to the soma, ultimately leading to an effective control of somatic spike generation. Our results suggest that dendritic oscillations enable the dendritic tree to operate on more global temporal and spatial scales than previously thought; notably that local dendritic activity may be a mechanism for generating on-going whole-cell voltage oscillations.
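The notion of a phase-locking state mentioned in the abstract above can be illustrated with a generic toy model of two weakly coupled phase oscillators. This sketch assumes a purely sinusoidal interaction function and a hypothetical coupling strength `coupling`. It does not use the cable-theoretic interaction function derived in the paper. Under that assumption the phase difference φ obeys dφ/dt = Δω − 2K sin φ, which has a stable locked state at φ = arcsin(Δω / 2K) whenever |Δω| ≤ 2K and K > 0.

```python
import math


def simulate_phase_difference(delta_omega, coupling, phi0=2.0, dt=0.01, t_max=50.0):
    """Euler-integrate d(phi)/dt = delta_omega - 2 * coupling * sin(phi)."""
    phi = phi0
    for _ in range(int(t_max / dt)):
        phi += dt * (delta_omega - 2.0 * coupling * math.sin(phi))
    return phi


def locked_phase_difference(delta_omega, coupling):
    """Stable locked phase difference for coupling > 0, or None if none exists."""
    ratio = delta_omega / (2.0 * coupling)
    if abs(ratio) > 1.0:
        return None  # frequency mismatch too large: the phases drift
    return math.asin(ratio)
```

For example, `simulate_phase_difference(0.5, 1.0)` should settle near the value returned by `locked_phase_difference(0.5, 1.0)`, while a mismatch of `delta_omega=3.0` with the same coupling has no locked state in this toy model.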